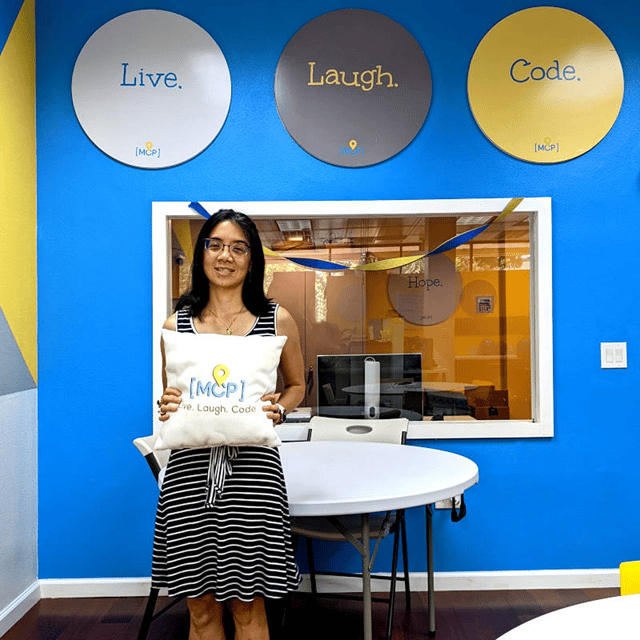

The K12 Engineering Education Podcast

Promoting education in engineering and design for all ages. Produced by Pius Wong, engineer, of Pios Labs (www.pioslabs.com).

This podcast is for educators, engineers, entrepreneurs, and parents interested in bringing engineering to younger ages. Listen to real conversations among professionals in the engineering education space as we try to find better ways to teach kids engineering thinking and inspire them to pursue it.

Topics include:

- overcoming institutional barriers to engineering and STEM in K12
- cool ways to teach engineering
- equity in access to engineering
- industry needs for engineers
- strategies for training teachers
- "edtech" solutions for K12 classrooms
- curriculum and pedagogy reviews
- research on how kids learn engineering knowledge and skills

For episode transcripts and more information, visit www.k12engineering.net. Thanks for listening!